Study on Efficient Models


Efficient Methods

In DNNs, energy is consumed mainly by data transfer (memory access) and MAC (multiply-accumulate) operations. To reduce energy consumption, we need methods or models that reduce the memory and compute cost of DNNs, that is, neural network compression.

According to recent survey papers [0][1][3], we can classify the existing compression methods by their objectives as follows:

| CLASS | OBJECTIVE | MAJOR METHODS |
|---|---|---|
| Compact Model | Design smaller base models that can still achieve acceptable accuracy. | Efficient Lightweight DNN Models |
| Tensor Decomposition | Decompose bloated original tensors into several smaller tensors. | – |
| Data Quantization | Reduce the number of data bits. | Network Quantization |
| Network Sparsification | Sparsify the computational graph (number of connections/neurons). | Network Pruning |
| Knowledge Transferring | Learn the output distributions of a trained large model. | Knowledge Distillation |
| Architecture Optimization | Optimize the model parameters. | NAS, Genetic Algorithm |

Compact Model

The performance improvement of modern DNNs is mainly driven by deeper and wider networks with more parameters and operations (MACs) [0]. Compact models aim to reduce this overhead while maintaining accuracy as much as possible.

Compact models try to design smaller base models while still allowing deeper/wider networks with expanded feature maps (FMs), more complex branch topologies, and more flexible Conv arithmetic. They can be classified by the following two aspects:

- Ensure large spatial correlations (receptive field) while remaining compact.

- Ensure deeper channel correlations while remaining compact.

Overview on Current DNN Models

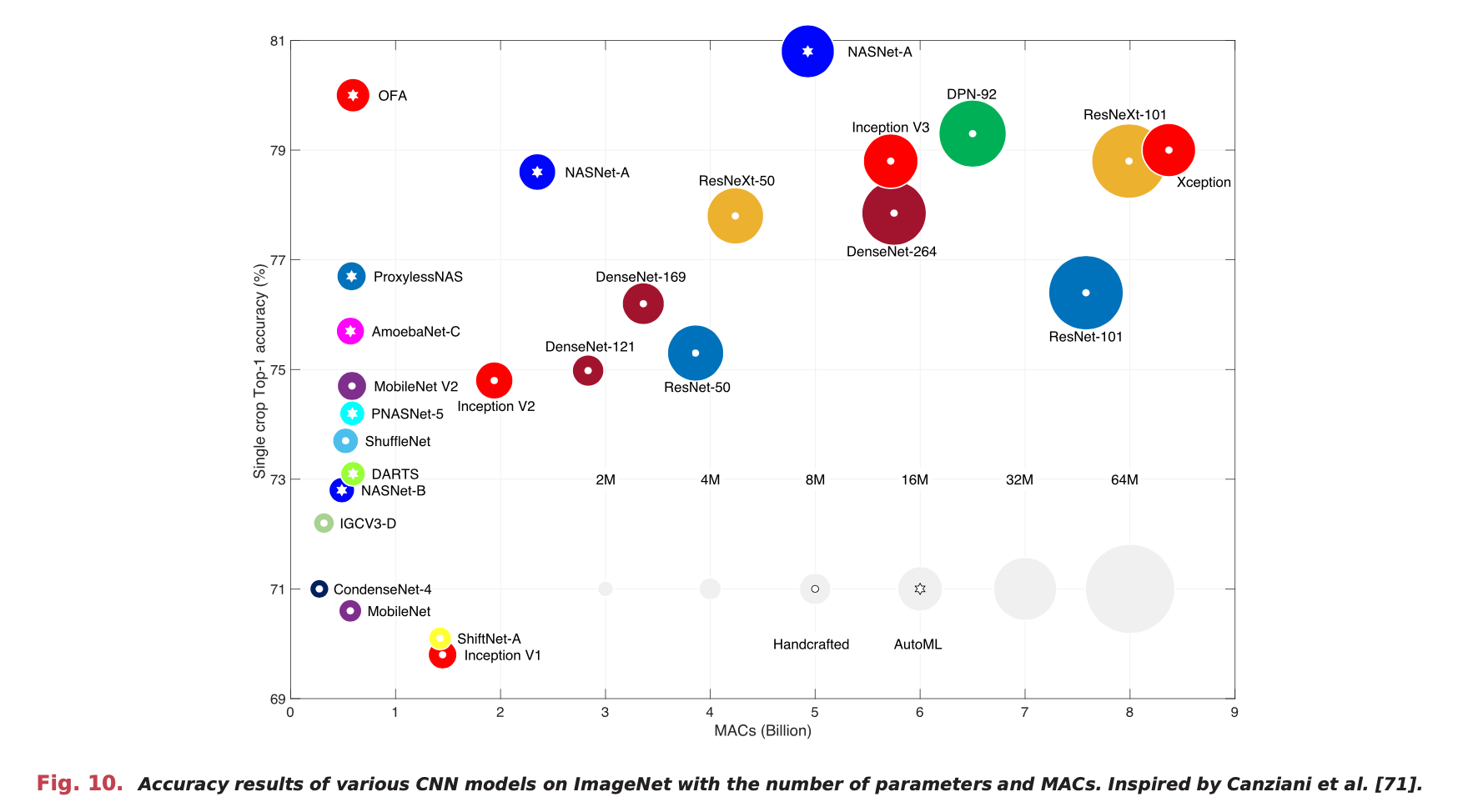

I have surveyed most of the popular models proposed in the DNN field; they differ in accuracy, speed, and size. As an example, the paper [0] summarizes the most frequently used DNN models.

Of the models illustrated above, the following are typically referred to as lightweight models:

- SqueezeNets

- ShuffleNets

- MobileNets

- GoogLeNet (Inception)

- EfficientNets

- NasNets

- DenseNets

…

I will then evaluate each of them by studying the fundamentals of the idea, the implementation, and the comparisons and commonalities between them.

MobileNets

Main Idea

Presented by Google in 2017 [2]. MobileNet is a lightweight deep convolutional neural network that drastically reduces the number of parameters and operations while maintaining reasonable accuracy. It targets deployment or training on edge devices, with lower power consumption, smaller model size, and faster inference (lower latency).

The main idea of MobileNet is to construct relatively compact deep neural networks using a form of factorized convolution, namely Depthwise Separable Convolution, that captures both spatial correlations and channel correlations.

Different from the conventional convolution operations, Depthwise Separable Convolution is performed as follows:

- [Depthwise Convolution] Apply a single filter to each channel of the input feature map. This captures the spatial correlation.

- [Pointwise Convolution] Apply filters of size 1x1 to the combined output of the depthwise convolution. This captures the channel correlation.

Depthwise Separable Convolution performs convolution channel by channel, then combines the resulting tensors using N filters of size 1x1x(#Channel) to produce the output. This saves a dramatic number of parameters compared with the standard convolution.
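To make the saving concrete, the following minimal sketch counts the weights of a standard convolution and of a depthwise separable one. The layer sizes (`k`, `m`, `n`) are illustrative assumptions, not values from the paper, and bias terms are ignored.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard convolution (bias terms ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # N filters of shape 1 x 1 x M
    return depthwise + pointwise


# Illustrative sizes (assumed for this example): 3x3 kernel, 64 -> 128 channels.
k, m, n = 3, 64, 128
standard = standard_conv_params(k, m, n)
separable = depthwise_separable_params(k, m, n)
ratio = separable / standard  # equals 1/n + 1/k**2

print(standard, separable, round(ratio, 4))
```

The ratio reduces to 1/N + 1/k², so with 3x3 kernels the separable form needs roughly 8 to 9 times fewer weights once N is large.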

MobileNet V2

Theory

Intuitively, the computation and memory cost of a DNN depends largely on the input resolution and the number of channels of the tensors in its computational graph. Conventional convolutions extract feature information with Conv kernels that map the input into higher dimensions (channels), so that the set of inner-layer activations forms the “manifold of interest” [3] we wish to learn. Since it has long been assumed that manifolds of interest in DNNs can be embedded in low-dimensional subspaces, we can design compact models by simply reducing the dimensionality of the layers, and with it the operating space, while preserving the shape of the “manifold of interest”.

MobileNet V1 uses width and resolution multipliers [2] to trade off computation against accuracy. Following the intuition above, these multipliers allow one to reduce the dimensionality until the manifold of interest spans the entire space [3].

However, because of the non-linear activations (such as \(ReLU\)) applied after Conv layers, the “manifold of interest” may collapse. The paper [3] gives an intuitive illustration of this idea by embedding low-dimensional manifolds into n-dimensional spaces.

It can be observed that much of the information is lost when the manifold is embedded in a low-dimensional space (e.g. n=2,3) and transformed with \(ReLU\), whereas the information is mostly preserved when it is embedded in a high-dimensional space and transformed with \(ReLU\).

This result suggests using as many dimensions as possible, which conflicts with the idea of lightweight models, including MobileNet V1.

MobileNet V2 tries to address this problem: preventing the information loss while keeping parameter count and memory usage even lower.

Main Idea

The insights behind the MobileNet V2 architecture can be viewed as follows:

- Depthwise Separable Convolution - Saves parameters and operations.

- Linear Bottlenecks - Exploiting the fact that \(ReLU\) is only partially non-linear, insert a linear transformation after the high-dimensional \(ReLU\) transformation to resolve the conflict between complexity and manifold collapse.

- Inverted Residuals - Shortcut connections between the linear layers are more memory efficient. (When stride=2 this can be optional according to the paper.)

This design is named the Bottleneck Residual Block. A 1x1 convolution first increases the dimensionality of the input and is activated by \(ReLU6\); a 3x3 depthwise convolution follows; then another 1x1 convolution performs a linear transformation to produce the output, reducing the dimensions while preventing loss of information. Lastly, shortcut connections are added between consecutive linear layers to improve the ability of gradients to propagate across layers. Each layer is followed by batch normalization.
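The block described above can be sketched as a cost walk-through: the snippet below counts the multiply-accumulates of the three convolutions in one stride-1 block. The input size, channel counts, and expansion factor of 6 are assumptions chosen for illustration; batch normalization and the shortcut addition are left out of the count.

```python
def relu6(x):
    """ReLU6 clips activations to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def bottleneck_macs(h, w, c_in, c_out, expansion=6, kernel_size=3):
    """Multiply-accumulates of one stride-1 bottleneck residual block."""
    c_mid = c_in * expansion
    expand = h * w * c_in * c_mid                          # 1x1 expansion, followed by ReLU6
    depthwise = h * w * c_mid * kernel_size * kernel_size  # 3x3 depthwise, followed by ReLU6
    project = h * w * c_mid * c_out                        # 1x1 linear projection, no activation
    return expand + depthwise + project


# Illustrative block (assumed sizes): 56x56 feature map, 24 -> 24 channels.
macs = bottleneck_macs(h=56, w=56, c_in=24, c_out=24)
print(macs)
```

The total matches the closed form h·w·d'·t·(d' + k² + d'') for input channels d', output channels d'', and expansion factor t; note that the depthwise step, although it runs on the widest tensor, is the cheapest of the three.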

MobileNet V3

Main Idea & Background Knowledge

Proposed by Google in 2019 [4]. MobileNet V3 achieves better performance: it is \(3.2\%\) more accurate on ImageNet classification while reducing latency by \(20\%\) compared with MobileNet V2. It is mainly built on the following ideas:

- Bottleneck block with an SENet block introduced.

- Two Neural Architecture Search algorithms, used for block optimization and layer optimization.

- Redesign of some of the redundant, expensive structures.

- New nonlinearities.

To understand the design details of MobileNet V3, we first need to take a look at MnasNet [5], NetAdapt [6], and SENet [8].

MnasNet: Platform-Aware NAS for Mobile [5]

Proposed by Google in 2019, MnasNet is a model designed automatically under the guidance of Neural Architecture Search. Its main contribution is the observation that the FLOPS proxy widely used in NAS methods is not an accurate approximation of model latency; instead, it uses real latency measurements, obtained by running the model on physical devices, to guide the design.

The RNN controller first samples model parameters from the search space. The trainer then trains a model with the selected parameters and evaluates its accuracy. The model is subsequently run on real mobile phones to obtain its real latency. From the accuracy and the latency, a manually defined reward is computed and fed back to the controller.

The objective function for Platform-Aware NAS is defined as:

\(\max_{m} \quad ACC(m) \\ s.t. \quad LAT(m) \le T\)

However, given the computational cost of performing NAS, we are more interested in finding multiple Pareto-optimal solutions in a single architecture search than in maximizing a single metric.

Platform-Aware NAS uses a customized weighted product method to approximate Pareto-optimal solutions. The goal is defined as:

\(\max_{m} \quad ACC(m) \times \left[\frac{LAT(m)}{T}\right]^w\)

in which \(w\) is the weight factor, defined as:

\(w = \begin{cases} \alpha & LAT(m) \le T \\ \beta & otherwise \end{cases}\)

Empirically, the paper suggests that doubling the latency usually brings about a 5% relative accuracy gain. Following the idea of Pareto optimality, given two models, (1) M1 with latency \(l\) and accuracy \(a\), and (2) M2 with latency \(2l\) and accuracy \(1.05a\), they should have similar rewards:

\(Reward(M1)=a \cdot (\frac{l}{T})^\beta \approx Reward(M2)=a \cdot (1+5\%) \cdot (\frac{2l}{T})^\beta\)

Solving this equation gives \(\beta \approx -0.07\). Platform-Aware NAS uses \(\alpha = \beta = -0.07\) in its experiments unless explicitly stated.
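The value of \(\beta\) can be checked numerically. In the sketch below, `solve_beta` cancels \(a\) and \((l/T)^\beta\) from both sides of the reward equality, and `reward` implements the weighted product; the accuracy, latency, and target values in the example are made up for illustration.

```python
import math


def solve_beta(accuracy_gain=0.05, latency_factor=2.0):
    """Solve 1 = (1 + gain) * factor**beta for beta."""
    return -math.log(1.0 + accuracy_gain) / math.log(latency_factor)


def reward(acc, latency, target, alpha=-0.07, beta=-0.07):
    """Weighted product ACC(m) * (LAT(m) / T)**w."""
    w = alpha if latency <= target else beta
    return acc * (latency / target) ** w


beta = solve_beta()

# Hypothetical models: M2 has twice the latency and 5% higher relative accuracy.
r1 = reward(acc=0.70, latency=40.0, target=80.0)
r2 = reward(acc=0.70 * 1.05, latency=80.0, target=80.0)

print(round(beta, 4), round(r1, 4), round(r2, 4))
```

With the rounded exponent of -0.07, the two rewards agree to about three decimal places, which is what the Pareto argument asks for.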

NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications [6]

Proposed by Google in 2018. NetAdapt is a layer-by-layer network compression algorithm that, for a given pre-trained model, alters the number of filters in each layer to meet a given resource budget.

Similar to [5], the paper argues that the traditional measures, #parameters and #MACs, might not be sufficient to predict latency and energy consumption. NetAdapt therefore also uses direct metrics to guide the filter pruning.

One main difference between NetAdapt filter pruning and energy-aware pruning [7] is that NetAdapt uses empirical per-layer measurements to estimate the real resource consumption, so no detailed lower-level knowledge is required to estimate the real metrics.

The problem can be formulated as follows:

\(\max_{Net} \quad Acc(Net) \\ s.t. \quad Res_j(Net) \le Bud_j, \ j = 1,...,m\)

Since maintaining accuracy requires re-training after each alteration step, NetAdapt breaks the problem above into the following series of easier problems and solves them iteratively:

\(\max_{Net_i} \quad Acc(Net_i) \\ s.t. \quad Res_j(Net_i) \le Res_j(Net_{i-1}) - \Delta R_{i,j}, \ j = 1,...,m\)

where \(\Delta R_{i,j}\) is called the “Resource Reduction Schedule”. Similar to a learning rate schedule, it is a hyper-parameter that sets the reduction step size for each iteration.

In each iteration, the algorithm looks for networks that fit within the current resource budget, layer by layer: for each layer, it prunes filters from that layer alone, runs ShortTermFineTune, and stores the resulting network.

After all the layers have been evaluated, the network with the highest accuracy is selected from the \(K\) stored networks (\(K\) equals the number of layers).

The process is repeated until the budget constraint is satisfied.
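The loop above can be sketched on a toy problem. Everything in this snippet is a stand-in: a network is just a list of filter counts, `latency` is a hypothetical linear resource model replacing the per-layer measurements, and `accuracy` is a made-up proxy replacing short-term fine-tuning and evaluation. Only the control flow follows the description.

```python
def latency(filters):
    """Hypothetical resource model: every filter costs one latency unit."""
    return sum(filters)


def accuracy(filters):
    """Hypothetical stand-in for short-term fine-tuning followed by evaluation."""
    return sum(f / (f + 8.0) for f in filters)  # diminishing returns per layer


def netadapt(filters, budget, step):
    """Remove `step` latency units per iteration until the budget is met."""
    current = list(filters)
    while latency(current) > budget:
        proposals = []
        for layer in range(len(current)):       # one proposal per layer
            if current[layer] - step < 1:       # keep at least one filter
                continue
            candidate = current.copy()
            candidate[layer] -= step            # prune this layer only
            proposals.append(candidate)
        if not proposals:                       # nothing left to prune
            break
        current = max(proposals, key=accuracy)  # keep the most accurate proposal
    return current


pruned = netadapt(filters=[32, 64, 128], budget=160, step=16)
print(pruned, latency(pruned))
```

Because the toy accuracy proxy has diminishing returns, the widest layer is pruned first; the real algorithm reaches its decisions from measured accuracy after fine-tuning instead.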

Squeeze-and-Excitation Networks [8]

The paper introduces a method that emphasizes channel-wise features and their correlations through an “attention” mechanism. For a convolution output \(U=F_{tr}(X)\), where \(X\) is the input and \(F_{tr}\) is a standard convolution operation, we can append an SE block to boost feature discriminability.
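As a rough sketch of what such a block computes, the snippet below follows the squeeze (global average pooling), excitation (two fully connected layers with ReLU and sigmoid), and scale steps from the SENet paper. It uses plain nested lists as a feature map of shape channels x height x width; the tiny weight matrices `w_reduce` and `w_expand` are hypothetical values chosen so the result is easy to follow, and biases are omitted.

```python
import math


def squeeze(feature_map):
    """Global average pooling: one scalar per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def dense(vector, weights):
    """Fully connected layer without bias; each row of `weights` yields one output."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def excite(z, w_reduce, w_expand):
    """Bottleneck MLP: reduce, ReLU, expand, sigmoid. Returns one gate per channel."""
    hidden = [max(0.0, h) for h in dense(z, w_reduce)]
    return [1.0 / (1.0 + math.exp(-s)) for s in dense(hidden, w_expand)]


def se_block(feature_map, w_reduce, w_expand):
    """Rescale every channel of the feature map by its learned gate."""
    gates = excite(squeeze(feature_map), w_reduce, w_expand)
    return [[[g * x for x in row] for row in ch] for g, ch in zip(gates, feature_map)]


# Two 2x2 channels and hypothetical weights (2 -> 1 -> 2 channels).
u = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
w_reduce = [[1.0, 0.0]]
w_expand = [[0.0], [1.0]]
out = se_block(u, w_reduce, w_expand)
print(out)
```

The output has the same shape as the input, which is why the block can be dropped in after an existing convolution without changing the rest of the network.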

This design won first place in the ILSVRC 2017 classification competition: the top-performing model ensemble achieves a \(2.251\%\) top-5 error on the test set, an \(\approx25\%\) relative improvement over the previous winner.

Now back to MobileNet V3. There are two types of MobileNet V3 models according to the paper, the MobileNetV3-Large & MobileNetV3-Small, targeted at high and low resource use cases respectively.

Block Structure

MobileNet V3 integrates the lightweight Squeeze-and-Excitation attention module into its bottleneck block. The SE block is placed after the depthwise convolution in the expansion, so that attention is applied on the largest representation. Furthermore, MobileNet V3 uses a compression rate of \(\frac{1}{4}\) in the fully connected layers; in experiments, doing so increases accuracy at a modest increase in the number of parameters and with no discernible latency cost.

Network Search

1 - Block-wise Search

MobileNet V3 used the Platform-Aware Neural Architecture Search approach for large mobile models and found results similar to those in [5]; therefore MobileNet V3 simply reuses the same MnasNet-A1 as its initial large mobile model.

However, small mobile models are observed to be more latency-sensitive; in other words, accuracy changes more dramatically with latency for small models, so the empirical accuracy-latency trade-off assumed in [5] might not be suitable. Therefore, MobileNetV3-Small uses the weight factor \(w=-0.15\) instead of \(w=-0.07\).

2 - Layer-wise Search

After the rough block architecture is defined, MobileNet V3 uses NetAdapt as a complementary technique to search for optimal individual layer configurations in a sequential manner, rather than trying to infer a coarse but global architecture.

MobileNet V3 modifies the algorithm by selecting as the final proposal the one that maximizes $\frac{\Delta Acc}{|\Delta Latency|}$, in which $\Delta Latency$ satisfies the reduction schedule $\Delta R$. The intuition is that because our proposals are discrete, we prefer proposals that maximize the slope of the trade-off curve.

The paper sets $\Delta R = 0.01|L|$ and $T=10000$, where $L$ is the latency of the original model and $T$ is the number of iterations for executing the NetAdapt algorithm. As in [6], proposals for MobileNet V3 are allowed to make the following two types of changes:

- Reduce the size of any expansion layer;

- Reduce bottleneck in all blocks that share the same bottleneck size to maintain residual connections.
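The modified selection rule can be sketched as follows. The proposal records and their numbers are invented for illustration, and reading "satisfies the reduction schedule" as \(|\Delta Latency| \ge \Delta R\) is an assumption of this sketch.

```python
def select_proposal(proposals, delta_r):
    """Pick the feasible proposal with the best accuracy change per unit of latency saved."""
    feasible = [p for p in proposals if abs(p["delta_latency"]) >= delta_r]
    return max(feasible, key=lambda p: p["delta_acc"] / abs(p["delta_latency"]))


original_latency = 100.0            # hypothetical latency L of the seed model, in ms
delta_r = 0.01 * original_latency   # reduction schedule: 1% of L per step

# Hypothetical proposals: accuracy change (in points) and latency change (in ms).
proposals = [
    {"name": "A", "delta_acc": -0.4, "delta_latency": -1.0},
    {"name": "B", "delta_acc": -0.6, "delta_latency": -2.0},
    {"name": "C", "delta_acc": -0.1, "delta_latency": -0.5},  # saves too little latency
]

chosen = select_proposal(proposals, delta_r)
print(chosen["name"])
```

Proposal B loses more accuracy than A in absolute terms, but it loses less per millisecond saved, so the ratio criterion prefers it.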

Redesigning Expensive Layers

The current model, based on MobileNet V2, uses a 1x1 pointwise convolution as the final layer for dimension expansion; average pooling and another 1x1 convolution then reduce both the channel size and the feature map size to produce the output. The final dimension expansion layer is important because it ensures rich features for prediction; however, it is latency-expensive.

MobileNet V3 moves this layer past the final average pooling. This makes computing the features nearly free in terms of computation and latency.

With this layer moved after average pooling, the previous projection layer is no longer needed to reduce computation, so the projection layer and the 3x3 depthwise filtering layer (replaced by average pooling) of the last bottleneck block are both removed.

Nonlinearities

MobileNet V3 introduces piece-wise linear hard analogs of the $Sigmoid$ and $Swish$ activations, formally:

\(\text{hard-Sigmoid}[x] = \frac{ReLU6(x+3)}{6}\)

and

\(\text{hard-Swish}[x] = x \frac{ReLU6(x+3)}{6}\)

Experiments show that these hard functions have no discernible difference in accuracy from the soft ones, but they are:

- More friendly to quantized mode, since they eliminate the potential numerical precision loss caused by different implementations of the approximate sigmoid.

- Cheaper: h-swish can be implemented as a piece-wise function, which reduces the number of memory accesses and drives the latency cost down substantially.
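The two definitions translate directly into code. The sketch below also includes a branch-based variant, `hard_swish_piecewise`, to illustrate what "implemented as a piece-wise function" can look like; that variant is an illustration and not the paper's optimized kernel.

```python
import math


def relu6(x):
    """ReLU6 clips activations to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def hard_sigmoid(x):
    return relu6(x + 3.0) / 6.0


def hard_swish(x):
    return x * hard_sigmoid(x)


def swish(x):
    """Soft version, x * sigmoid(x), for comparison."""
    return x / (1.0 + math.exp(-x))


def hard_swish_piecewise(x):
    """Same function written as three branches, with no clipping call."""
    if x <= -3.0:
        return 0.0
    if x >= 3.0:
        return x
    return x * (x + 3.0) / 6.0


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(x, hard_swish(x), hard_swish_piecewise(x), swish(x))
```

Outside the interval (-3, 3) the hard version is exactly zero or the identity, which is where the saving comes from: no exponential has to be evaluated anywhere.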

In addition, the paper mentions that the cost of applying a nonlinearity decreases deeper in the network, because each reduction in resolution typically halves the activation memory of a layer. MobileNet V3 therefore uses h-swish only in the deeper layers (the second half of the network). Even so, h-swish still introduces some latency cost, which can be addressed by an optimized implementation based on a piece-wise function.

Inceptions

(Which I call “The Width Learning” lol.)

GoogLeNet & Inception V1

GoogLeNet, first proposed by Google in 2014, won first place in the 2014 ILSVRC challenge. Various improvements were made afterwards, including InceptionV2 (BN), InceptionV3, InceptionV4, and Xception.

Theory

The paper [9] points out that deeper (more blocks) and wider (more channels) DNN models have a large number of parameters and are therefore more prone to overfitting, especially since high-quality training data can be very limited: preparing such datasets is not only tricky but also expensive.

Another issue with those models is the increased computational and memory cost, which is very energy-inefficient. The fundamental way of solving both issues, widely used at the time, is to sparsify the computational graph. However, since the low-level hardware of our computing devices is mostly designed for structured computation, sparsifying redundant weights at random does not bring much benefit to the models. Structural pruning, proposed later, in turn loses model accuracy.

The paper referenced the main result of [10], which states that:

If the probability distribution of the dataset is representable by a large, very sparse deep neural network, then the optimal network topology can be constructed layer by layer by analyzing the correlation statistics of the activations of the last layer and clustering neurons with highly correlated outputs.

Neurons That Fire Together, Wire Together. (Hebbian Principle)

Based on the above, the Inception structure is proposed: a dense, wider design that approximates local sparsity and aggregates the resulting channels as the output.

In the first attempt, 2(a), the Inception block consists of a combination of state-of-the-art layers whose output filter banks are concatenated into a single output. Once 1x1 dimension reduction is applied, rather than the naive approach, the Inception block can extract richer information (from different sparse units, plus spatial information at different scales from multiple kernel sizes). With this design, Inception can increase the number of units at each block without a blow-up in computational complexity, while keeping sparse computation away from the lower level.
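The effect of the 1x1 dimension reduction can be illustrated by counting multiply-accumulates for a single 5x5 branch, with and without the reduction. The feature map size and channel counts below are assumed for the example.

```python
def conv_macs(h, w, kernel_size, c_in, c_out):
    """Multiply-accumulates of a stride-1, same-padded convolution."""
    return h * w * kernel_size * kernel_size * c_in * c_out


# Assumed sizes for illustration: 28x28 input with 192 channels, 32 output channels.
h = w = 28
c_in, c_reduce, c_out = 192, 16, 32

naive = conv_macs(h, w, 5, c_in, c_out)                # 5x5 applied directly
reduced = (conv_macs(h, w, 1, c_in, c_reduce)          # 1x1 reduction to 16 channels
           + conv_macs(h, w, 5, c_reduce, c_out))      # 5x5 on the reduced tensor

print(naive, reduced, round(naive / reduced, 2))
```

In this example the reduced branch costs roughly a tenth of the naive one, which is what leaves room for the wider, multi-branch design.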

A compact model using Inception blocks is proposed in the paper, namely GoogLeNet (with 2 additional auxiliary classifiers, weighted 0.3, at lower/middle depth).

This design beats VGG with a ~1.5% lower error rate.

Inception V2 (BatchNorm)

Proposed by Google in 2015 [11]. Considering the widely used SGD method for updating DNN weights, the paper points out that the output of a layer is largely affected by the inputs from the previous layer. Therefore, during the training/testing stage, the distribution of the inputs matters to the model. It would be advantageous for the distribution of the inputs to remain fixed over time, so that the network weights do not have to readjust to a new distribution. This change in the distribution of internal network nodes is called Internal Covariate Shift (ICS).

The paper proposes a method called Batch Normalization, which is now commonly used in the DL community.

During backpropagation, the scale parameter $\gamma$ and the shift parameter $\beta$ also need to be learned, by computing the gradient of the loss $l$ with respect to these parameters. Batch Normalization also allows the learning rate to be set higher without the weights blowing up. In addition, with BN, the dropout layer can sometimes be discarded.
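For reference, the training-time forward pass of Batch Normalization over one feature can be sketched as below: normalize the mini-batch to zero mean and unit variance, then apply the learned scale $\gamma$ and shift $\beta$. The batch values and the `eps` constant are illustrative assumptions; the running statistics used at inference time are left out.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)  # biased batch variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


activations = [1.0, 2.0, 3.0, 4.0]  # hypothetical activations of one neuron
normalized = batch_norm(activations)
shifted = batch_norm(activations, gamma=2.0, beta=1.0)

print(normalized)
print(shifted)
```

With $\gamma=1$ and $\beta=0$ the output has zero mean and (almost) unit variance; learning $\gamma$ and $\beta$ lets the network undo the normalization where that helps.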

Inception V2 builds on Batch Normalization by adding BN after each layer transformation, using $ReLU$ as the activation function, and replacing the 5x5 Conv with two consecutive 3x3 Convs.

Inception with BN, trained with various larger learning rates, reaches the same result much faster in terms of training steps.

Inception V3

Proposed by Google in 2015 [12]. The paper revisits the Inception designs and proposes the following 4 general design principles for DNNs, based on empirical evidence:

- Avoid extreme representational bottlenecks, especially in the early network. Otherwise information could be lost.

- Higher dimensional representations are easier to process locally within a network, so the network trains faster.

- Spatial aggregation can be done over lower dimensional embeddings without much or any loss in representational power.

- Balance network width and depth.


A more efficient Inception can be achieved by factorizing the convolutions. First, factorize the original convolutions into stacks of smaller convolutions. Doing so makes the in-block network deeper, and hence improves the representational power from the model's non-linearity, while significantly saving parameters and MACs.

Another approach, rather than replacing a larger convolution with smaller convolutions, is to factorize the original convolution into asymmetric convolutions.

For example, a 3x3 kernel can be decomposed into a stack of 1x3 and 3x1 kernels, with 33% fewer parameters.
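Both factorizations can be checked with a few lines of arithmetic. The sketch counts kernel weights per input/output channel pair, assuming the channel counts stay the same before and after factorization.

```python
def kernel_weights(shapes):
    """Total weights of a stack of kernels, per input/output channel pair."""
    return sum(height * width for height, width in shapes)


def saving(original, factorized):
    """Fraction of weights saved by replacing `original` with `factorized`."""
    return 1.0 - kernel_weights(factorized) / kernel_weights(original)


# One 5x5 kernel replaced by two stacked 3x3 kernels.
five_to_threes = saving([(5, 5)], [(3, 3), (3, 3)])

# One 3x3 kernel replaced by a 1x3 kernel followed by a 3x1 kernel.
asymmetric = saving([(3, 3)], [(1, 3), (3, 1)])

print(round(five_to_threes, 2), round(asymmetric, 2))
```

The first case saves 28% (18 weights instead of 25) and the second 33% (6 instead of 9), while both keep the receptive field of the original kernel.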

In addition, the paper proposes a method of down-sampling. Down-sampling is currently performed by either:

- Pooling -> Enlarge Dimensions: This suffers from loss of information.

- Enlarge Dimensions -> Pooling: Computationally costly.

The paper proposes a method called Efficient Grid Size Reduction, which leverages the parallel units of Inception blocks to perform the two actions above in parallel to produce the output.

This approach achieves $7.8\%$ and $3.8\%$ lower error rates than InceptionV1 and V2 respectively.

References

[0] Deng, Lei, et al. “Model compression and hardware acceleration for neural networks: A comprehensive survey.” Proceedings of the IEEE 108.4 (2020): 485-532.

[1] Cheng, Yu, et al. “A survey of model compression and acceleration for deep neural networks.” arXiv preprint arXiv:1710.09282 (2017).

[2] Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., … & Adam, H. (2017). Mobilenets: Efficient convolutional neural networks for mobile vision applications.

[3] Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., & Chen, L. C. (2018). Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4510-4520).

[4] Howard, A., Sandler, M., Chu, G., Chen, L. C., Chen, B., Tan, M., … & Adam, H. (2019). Searching for mobilenetv3. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1314-1324).

[5] Tan, M., Chen, B., Pang, R., Vasudevan, V., Sandler, M., Howard, A., & Le, Q. V. (2019). Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 2820-2828).

[6] Yang, T. J., Howard, A., Chen, B., Zhang, X., Go, A., Sandler, M., … & Adam, H. (2018). Netadapt: Platform-aware neural network adaptation for mobile applications. In Proceedings of the European Conference on Computer Vision (ECCV) (pp. 285-300).

[7] Yang, T. J., Chen, Y. H., & Sze, V. (2017). Designing energy-efficient convolutional neural networks using energy-aware pruning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[8] Hu, J., Shen, L., & Sun, G. (2018). Squeeze-and-excitation networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 7132-7141).

[9] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., … & Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1-9).

[10] Arora, S., Bhaskara, A., Ge, R., & Ma, T. (2013). Provable bounds for learning some deep representations. CoRR, abs/1310.6343.

[11] Ioffe, S., & Szegedy, C. (2015, June). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning (pp. 448-456). PMLR.

[12] Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., & Wojna, Z. (2016). Rethinking the inception architecture for computer vision. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2818-2826).